Unspoken Word: a Creative Springboard Musical Tool

Unspoken Word is an open-source software project that aims to make music making accessible to broad audiences. It does this by analysing spoken word, which is then converted into music that the user can control. The project, which was supported throughout by the AudioCommons team, was jointly conceived and produced by Queen Mary Media & Arts Technology PhD students Jorge del Bosque and Lizzie Wilson as part of a collaboration with Henry Cooke and Tim Cowlishaw at BBC R&D.

HOW DOES IT WORK?

When the main script is launched from the Terminal, the computer waits for the user to speak a brief poem or a short sentence. The software converts the spoken utterance into text and analyses the semantic features of the transcript. These features are then mapped to the parameters of a generative music system, which creates music related to the meaning of the text. Finally, a mixing interface populated with sound channels pops up, in which the user can create their own song or soundscape.

Unspoken Word Interface

There are nine channels. The first four play a variety of sounds from different digital musical instruments, generated using random walks on a Markov chain. The remaining five channels play sounds retrieved from the Freesound database through API queries, made possible by Freesound's integration into SuperCollider.
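The write-up does not describe the chains themselves, so the following is only a minimal sketch of the general technique: a random walk on a first-order Markov chain over the seven degrees of a major scale, turned into MIDI note numbers. The transition table and the names `TRANSITIONS`, `random_walk` and `to_midi` are hypothetical illustrations, not the project's code (which generated its instrument parts in SuperCollider).

```python
import random

# Hypothetical first-order transition table over scale degrees 0-6.
# Each state maps to its possible next states and their probabilities.
TRANSITIONS: dict[int, dict[int, float]] = {
    0: {0: 0.1, 1: 0.3, 2: 0.3, 4: 0.3},
    1: {0: 0.4, 2: 0.4, 3: 0.2},
    2: {1: 0.3, 3: 0.3, 4: 0.4},
    3: {2: 0.4, 4: 0.4, 5: 0.2},
    4: {0: 0.4, 3: 0.2, 5: 0.4},
    5: {4: 0.5, 6: 0.3, 3: 0.2},
    6: {0: 0.7, 5: 0.3},
}

MAJOR_STEPS = [0, 2, 4, 5, 7, 9, 11]  # semitone offsets of the major scale


def random_walk(start: int, length: int, rng: random.Random) -> list[int]:
    """Walk the chain for `length` states, beginning at `start`."""
    state = start
    walk = [state]
    for _ in range(length - 1):
        options = TRANSITIONS[state]
        # Draw the next state according to the transition probabilities.
        state = rng.choices(list(options), weights=list(options.values()))[0]
        walk.append(state)
    return walk


def to_midi(degrees: list[int], tonic: int = 60) -> list[int]:
    """Map scale degrees to MIDI note numbers in the major key on `tonic`."""
    return [tonic + MAJOR_STEPS[d] for d in degrees]


if __name__ == "__main__":
    rng = random.Random(42)  # seeded so the melody is reproducible
    melody = to_midi(random_walk(start=0, length=8, rng=rng), tonic=62)  # D major
    print(melody)
```

Because each step depends only on the current degree, the walk produces locally coherent but never-repeating lines. Swapping in a different table per instrument is one plausible way to give each of the four channels its own character.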

a) The sentiment and magnitude of the transcript determine the tempo.

b) Combined values of sentiment and magnitude generate the key centre (based on Christian Schubart's key characteristics and a valence model of emotion).

c) The concreteness or abstractness of the words defines the timbre of the sounds used.

d) Nouns and adjectives in the transcript are used to query the Freesound library, allocating one sound file per channel (up to five).
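The four mappings above can be sketched in Python. This is a minimal illustration under stated assumptions, not the project's code: it assumes sentiment arrives as a Google Cloud Natural Language style score in [-1.0, 1.0] plus a non-negative magnitude, that concreteness is an average word rating on a 1 to 5 scale, and that the transcript has already been part-of-speech tagged with Penn Treebank tags (for example by NLTK's `nltk.pos_tag`). The tempo formula, thresholds, timbre labels and quadrant-to-key table are all hypothetical placeholders; in particular, the keys stand in for, and do not reproduce, the project's Schubart-based assignment.

```python
from dataclasses import dataclass


@dataclass
class MusicParams:
    tempo_bpm: float
    key: str
    timbre: str
    query_words: list[str]


# Hypothetical keys per (positive valence, high arousal) quadrant.
QUADRANT_KEYS = {
    (True, True): "D major",    # positive, energetic
    (True, False): "F major",   # positive, calm
    (False, True): "C minor",   # negative, energetic
    (False, False): "F minor",  # negative, calm
}


def tempo_from_sentiment(score: float, magnitude: float) -> float:
    """Hypothetical linear mapping into 70-140 BPM: brighter, stronger text is faster."""
    arousal = min(magnitude, 4.0) / 4.0  # clamp magnitude into [0, 1]
    return 70.0 + 30.0 * (score + 1.0) / 2.0 + 40.0 * arousal


def key_from_sentiment(score: float, magnitude: float) -> str:
    """Use score as valence and magnitude as arousal to pick a quadrant's key."""
    return QUADRANT_KEYS[(score >= 0.0, magnitude >= 1.0)]


def timbre_from_concreteness(mean_concreteness: float) -> str:
    """Assumes 1 = abstract, 5 = concrete; the labels are placeholders."""
    return "percussive/acoustic" if mean_concreteness >= 3.0 else "pad/ambient"


def query_words(tagged: list[tuple[str, str]], limit: int = 5) -> list[str]:
    """Unique nouns (NN*) and adjectives (JJ*), in order, one per Freesound channel."""
    words: list[str] = []
    for word, tag in tagged:
        w = word.lower()
        if tag.startswith(("NN", "JJ")) and w not in words:
            words.append(w)
        if len(words) == limit:
            break
    return words


def map_features(score: float, magnitude: float, mean_concreteness: float,
                 tagged: list[tuple[str, str]]) -> MusicParams:
    """Bundle the four mappings into one parameter set for the music engine."""
    return MusicParams(
        tempo_from_sentiment(score, magnitude),
        key_from_sentiment(score, magnitude),
        timbre_from_concreteness(mean_concreteness),
        query_words(tagged),
    )
```

For a tagged transcript such as `[("The", "DT"), ("silver", "JJ"), ("river", "NN"), ("sings", "VBZ")]` with a score of 0.6 and magnitude of 1.5, this sketch selects D major, a tempo of about 109 BPM, and the query words `silver` and `river` for two of the five Freesound channels.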

TECHNOLOGIES

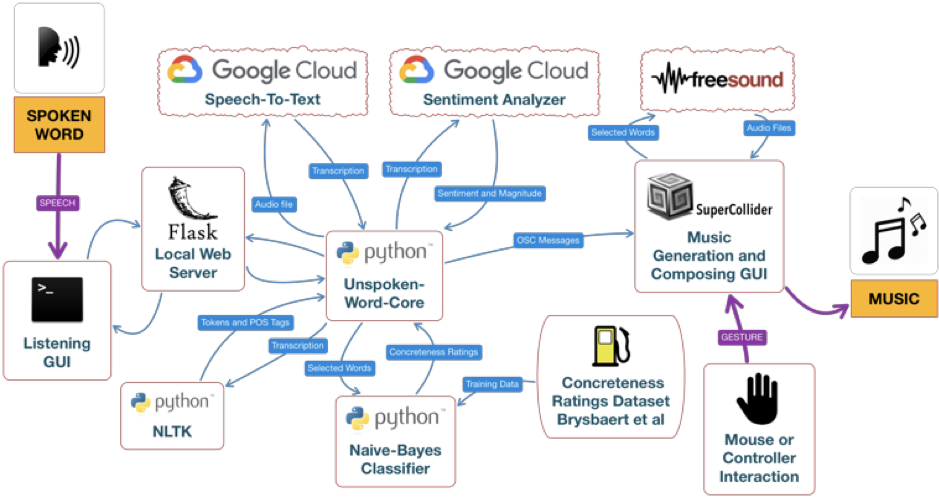

The software was developed using the following programming languages and tools: Python, NLTK, Google Cloud Speech-to-Text, Google Cloud Natural Language (sentiment analysis), Flask, SuperCollider and Freesound.

Unspoken Word Functional Diagram

Ars Electronica

An implementation of the software was recently embedded in an installation exhibited at Ars Electronica 2018 (Linz, Austria).

Unspoken Word @ArsElectronica -exploring the hidden codes inside spoken language and fertilizing the music composition process- An installation I built with @dgtlslvs part of our APP project @QMUL_MAT with @BBCRD and with crucial help and support from @prehensile and @mistertim pic.twitter.com/0ebdDwH17f

— Jorge del Bosque (@delbosque) September 12, 2018

The festival takes place annually in September, and this year Queen Mary University of London's interdisciplinary Media and Arts Technology PhD programme was invited to demo its interactive installations. As a result, Unspoken Word travelled to Austria for the week, and the tools developed here were tried out by thousands of festival visitors, who offered great feedback and suggested future developments.

Concept Picture for Ars Electronica

Acknowledgements

Lizzie Wilson and Jorge del Bosque are supported by EPSRC and AHRC Centre for Doctoral Training in Media and Arts Technology (EP/L01632X/1). Jorge del Bosque is also supported by the Mexican Council of Science and Technology (CONACyT).

The cover image is reposted from this page and should be credited as: Unspoken Word / Jorge del Bosque (MX), Lizzie Wilson (UK). Credit: vog.photo for Ars Electronica.